The 2026 Speaker Submission Guide: Stop Guessing, Start Designing

Run a call for papers that builds your agenda, respects your speakers, and saves time


When you’re running a call for speakers, you’re not just gathering content. You’re making decisions that shape the entire experience of your event. Every submission has the potential to become a standout session. Every speaker could be the reason someone registers, attends, or recommends your event to others.

But once the submissions start rolling in, things get complicated.

What begins as a thoughtful, strategic process quickly turns into a volume problem. You want to be fair. You want to be thorough. You want to give every submission the attention it deserves.

But when you’re managing hundreds of proposals, coordinating with multiple reviewers, and working without a clear system to guide the process, things get messy fast.

Scoring becomes inconsistent. Deadlines get missed. Reviewers apply different standards. And strong ideas can easily get lost in the noise.

It’s not a planning issue. It’s a process issue. And it’s one that most teams are still trying to solve with tools that weren’t designed for it.

We hear the same thing from event professionals again and again: the evaluation phase often feels like a black hole.

You kick things off with good intentions, maybe even a solid plan. But once the reviews start coming in, things get murky.

Instead of feeling like a thoughtful selection process, it turns into a scramble.

Decisions get delayed. Reviewers lose steam. Your team spends more time managing the process than focusing on the quality of the content.

And worst of all, strong submissions can slip through the cracks. You lose the chance to elevate new voices or bring in diverse perspectives — not because the content wasn’t there, but because the process got in the way.

Evaluation is not just an administrative task. It is a core part of event programming.

The sessions you select shape the voice of your event. The speakers you put on stage represent your values, your community, and your brand. When the evaluation process is inconsistent or unclear, it does more than create confusion. It breaks trust.

Internally, it leads to misalignment between reviewers and decision-makers. Externally, it can make submitters feel overlooked or dismissed.

But when your evaluation process is consistent, everything changes. You create a clear path for selection, one that respects the time and effort your community puts into their submissions and makes it easier for your team to move forward with confidence.

Here’s what consistency delivers:

Consistency is not about creating rigid rules. It is about building a process that gives you clarity, confidence, and the ability to scale without losing control.

Fair does not mean perfect. It means structured, transparent, and consistent, especially when you are working at scale.

A strong evaluation process creates a shared understanding of what good looks like and gives your team the tools to apply that consistently across every submission.

Here’s what that system needs to include:

Most teams try to build this kind of structure with disconnected forms, shared docs, and spreadsheets. And that’s exactly where things start to break down.

When the process is manual and scattered, it becomes impossible to keep everyone on the same page. You lose visibility, consistency starts to slip, and strong content can fall through the cracks without anyone realizing it.

Fair evaluation requires a system that supports it — not one that constantly works against you.

Sessionboard’s Evaluations feature was designed specifically for speaker and session review. It is not a generic form tool. It was built with the reality of event programming in mind — high submission volume, complex reviewer assignments, and the need for fairness, speed, and transparency.

You can create structured Evaluation Plans that define your scoring criteria, grading scales, and reviewer assignments. Everything is aligned before the first submission is even reviewed.

Reviewers can be assigned automatically based on tracks, topics, tags, or areas of expertise. This ensures the right people are reviewing the right content without manual coordination.

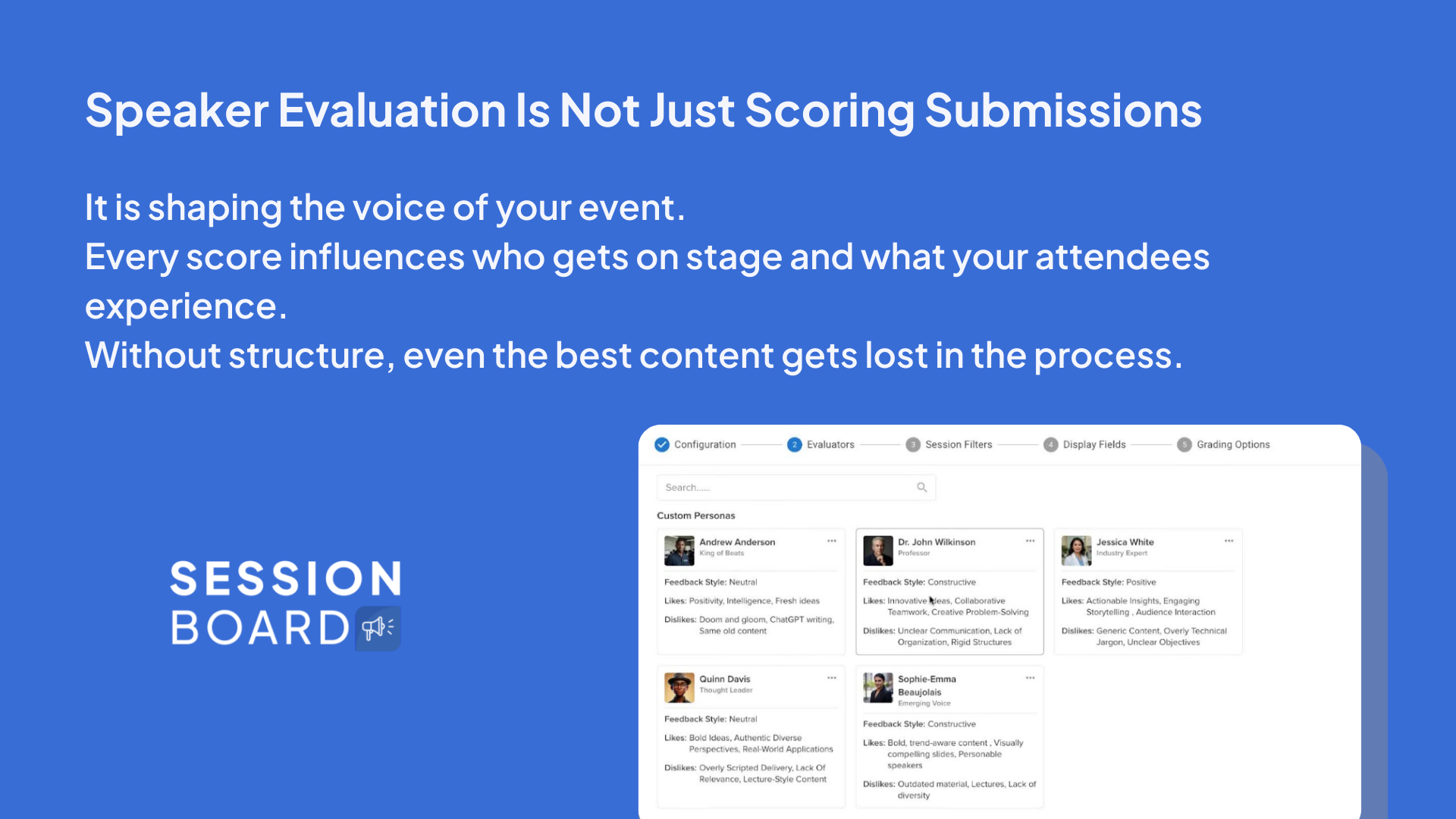

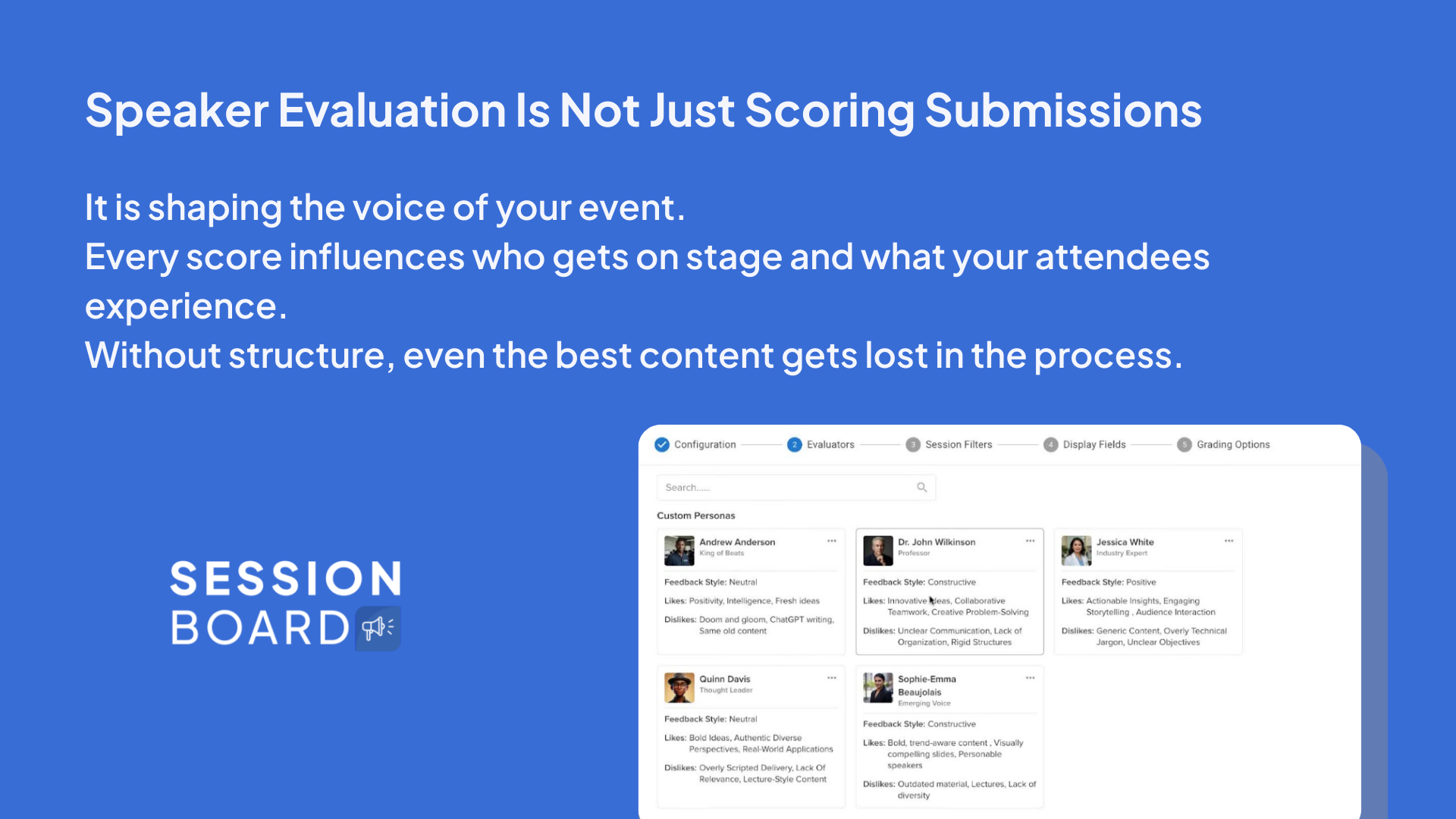

If you need help scaling the review process or want an additional layer of scoring, you can use Sessionboard’s AI-powered Evaluator Personas. These virtual reviewers generate consistent, contextual feedback based on your criteria and event goals — and everything they write is visible and fully auditable.

You can monitor progress in real time, quickly spot when a reviewer is falling behind, and step in before it creates a bottleneck.

Every score, every comment, and every decision is tied to the submission, reviewer, and timestamp. You always have the full picture.

And because it is connected to the rest of your speaker and session data, you are never copy-pasting from one tool to another or digging for context in spreadsheets. Everything lives in one place, built for the way events actually work.

When the evaluation process is structured, everything else gets easier.

Teams using Sessionboard report faster decision-making, even across large-scale calls for speakers. Reviewers stay aligned because expectations are clearly defined from the start. There are fewer conflicts, less second-guessing, and far less rework behind the scenes.

Progress can be tracked in real time. Feedback is standardized. And if a question ever comes up — whether from a team member or a submitter — you have a clear record of every score and comment. No guesswork. No scrambling to recreate decisions.

It is not just about saving time, although that happens too. It is about building a process that feels fair, reliable, and manageable, even when the volume grows.

One example: the team at Guru Media Hub used Sessionboard to evaluate over 250 speaker submissions with multiple reviewers. They were able to standardize scoring, surface top sessions quickly, and make confident programming decisions without sacrificing speed or inclusion.

The result was a smoother process, a more balanced agenda, and a better experience for everyone involved.

It is not just easier. It is better. And that is what matters most.

Speaker evaluations should not feel like a guessing game. And they should not require hours of manual coordination just to keep things on track.

Sessionboard gives you the structure, visibility, and flexibility to run evaluations at scale. You can stay organized, aligned, and fair — even when the volume grows and the timeline gets tight.

You do not have to sacrifice quality to move quickly. You just need a system that is built for the job.
